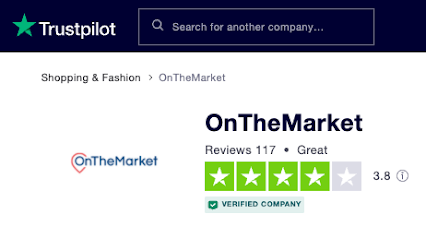

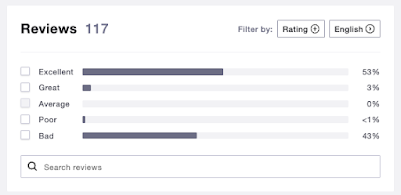

Which raised a wholly different question: how on earth does a business with 43 percent of its reviews rated 'Bad' (the lowest rating, one star) get a 4-star rating from Trustpilot, with the accompanying accolade of 'Great'?

What we can tell you is that, based on the spread of reviews shown above, both HelpHound and Google would score the business at 3.2, which on Trustpilot's own scale would earn the label 'Average'.

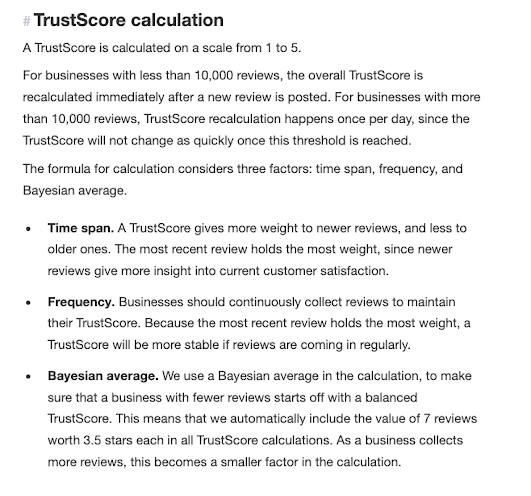

Trustpilot, in their explanation of their scoring system, cite the following factors; our comments on each follow:

- Weighting more recent reviews. We would recommend that any review system that departs from an unweighted mathematical calculation should completely discount reviews more than three years old. Part of the point of reviews is that businesses should learn from them, so consumers should be given a snapshot of the business as it is now, not one skewed by ancient reviews. In the case of OnTheMarket, they have received 11 five-star reviews and 8 one-star reviews since 1 January 2022. A simple average of those 19 reviews is 3.3 (63 stars divided by 19), and any 'weighting' would struggle to find a positive outcome from those stats.

- Frequency. We have no argument in principle, but the basis of calculation or weighting should be clear. We have no clue what the sentence 'Because the most recent review holds more weight, a TrustScore will be more stable if reviews are coming in regularly.' means.

- Bayesian average. Fortuitously, one of our directors used to work at HM Treasury and so is familiar with Bayesian averages. He explains that they are usually used to smooth averages calculated from small subsets of data, and that applying one cannot explain why a business that should be scoring 3.2 might suddenly score 3.8. For those of a mathematical bent, there is a Wikipedia entry on the Bayesian average.
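The two kinds of average discussed above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Trustpilot's method: `unweighted_mean` is the plain average that HelpHound and Google would use, and `bayesian_average` is the textbook form, which blends the real reviews with a number of imaginary 'prior' reviews (`prior_weight`) that each score `prior_mean`. Trustpilot's own parameters are not given here, so the prior values below are hypothetical. The OnTheMarket figures (11 five-star and 8 one-star reviews since 1 January 2022) are the ones quoted above.

```python
# A minimal sketch; the prior parameters used below are hypothetical, not Trustpilot's.
def unweighted_mean(counts: dict[int, int]) -> float:
    """Plain average star rating from a {stars: number_of_reviews} mapping."""
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no reviews to average")
    return sum(stars * k for stars, k in counts.items()) / n


def bayesian_average(counts: dict[int, int], prior_mean: float, prior_weight: float) -> float:
    """Textbook Bayesian average: the real reviews plus `prior_weight`
    imaginary reviews that each score `prior_mean`."""
    n = sum(counts.values())
    total_stars = sum(stars * k for stars, k in counts.items())
    return (prior_weight * prior_mean + total_stars) / (prior_weight + n)


# OnTheMarket reviews since 1 January 2022, as quoted above.
recent = {5: 11, 1: 8}
print(round(unweighted_mean(recent), 2))  # 3.32

# Hypothetical prior: 10 imaginary reviews averaging 3.5 stars.
print(round(bayesian_average(recent, prior_mean=3.5, prior_weight=10), 2))  # 3.38

# The same mix of reviews at 100 times the volume: the prior barely matters.
many = {stars: k * 100 for stars, k in recent.items()}
print(round(unweighted_mean(many), 2), round(bayesian_average(many, 3.5, 10), 2))  # 3.32 3.32
```

Whatever prior is chosen, a Bayesian average can only pull the score towards `prior_mean`, and that pull fades as the number of real reviews grows: with 100 times as many reviews, the result is almost identical to the plain average.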

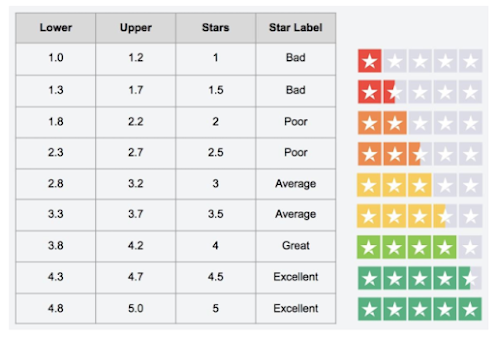

Here's their star rating and 'Excellent/Great/Good/Average/Poor/Bad' matrix:

Which illustrates the difficulty, for the consumer, inherent in such a system. Any business scoring in the range 3.8 to 4.2 is labelled 'Great'. Seriously? Such a business is likely to be rated 1* by at least twenty percent of its customers (Bayesian average or not). Would you use a doctor, lawyer or financial adviser if one in five of their customers rated them one star, bearing in mind that a business cannot be rated 0 stars?

Last, but not least, this:

We have no argument with the last line, but the rest? Really? Well, yes, in the case of Trustpilot. Businesses paying Trustpilot do score better than those that don't. But that is true of any review system: passive businesses invariably pick up 1* reviews, because unhappy customers are so much more motivated to post them. HelpHound clients invariably score 4.5+, but that's because they are excellent businesses with dedicated CRM. And, as all our regular readers know, we advise our clients to focus their efforts on their own websites and Google; in other words, where their reviews will be seen.

In Conclusion

It's best to let consumers make up their own minds about whether a business is good or not, by reading the individual reviews alongside an 'unmanipulated' score.
